Two papers from the Skoltech Computer Vision group, led by Professor Victor Lempitsky, have been accepted to NIPS, the largest global forum on artificial intelligence and machine learning. The 2016 NIPS forum will take place in Barcelona on December 5-11. The papers, which passed a rigorous selection process, are devoted to two applications of deep neural networks to image analysis.

The Skoltech Computer Vision group develops computer systems that retrieve and organize the information contained in images of various origins. For this purpose, group members develop new machine learning techniques that can adapt to the diversity of visual information in the modern world. Deep learning approaches are one class of methods actively studied in Lempitsky’s group.

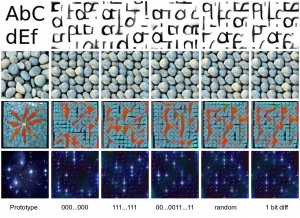

The first paper that will be presented at NIPS is co-authored by Skoltech Master’s graduate Oleg Grinchuk and PhD student Vadim Lebedev. The work proposes an alternative to existing classes of visual markers (such as QR codes and bar codes), based on a novel approach to marker design. In this approach, a deep neural network converts input bit sequences into visual markers, and a second neural network is trained to recover the input bit sequence from distorted markers. The two networks are trained jointly to optimize both the robustness of recognition and the aesthetic quality of the markers. As a result, markers designed by deep learning can be rendered in any visual style, including the brand style of a particular organization. The new markers therefore look quite different from the usual black-and-white QR codes and other systems on the market: the markers developed in Lempitsky’s group are bright and colorful, yet they still encode information. Examples of such markers can be found on the project page.

Vadim Lebedev: “When we create a visual marker, we take an input bit sequence (64-256 bits), and a special neural network draws a visual marker based on this code. Then you can print it, hang it on the wall and take a photo. The second neural network is needed to decode the information from this image. We learned how to model what happens to an image when it is printed and photographed. This allowed us to train the two networks jointly. That’s why our approach is very flexible, and we can adapt markers to specific reading devices and usage conditions.”

For now, the technology is limited in the amount of information it can encode, which is less than in QR codes. Nevertheless, such visual markers can already be used to encode dynamic links and to help users navigate indoors.
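
The encode, distort, decode loop described above can be illustrated with a toy sketch. This is not the authors' implementation: it assumes two single-layer networks, a 4-bit payload, an 8-pixel "marker", and additive Gaussian noise as a crude stand-in for the print-and-photograph model. It also leaves out the aesthetic (style) objective. All names and constants are hypothetical.

```python
import math
import random

N_BITS = 4        # toy payload (the article mentions 64-256 bits)
N_PIXELS = 8      # toy marker size (assumption)
NOISE_STD = 0.3   # assumed noise level standing in for print/photo distortion
LR = 0.1          # learning rate (assumption)

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def encode(w_enc, bits):
    """Marker-drawing network: map bits to pixel intensities in [-1, 1]."""
    signs = [2 * b - 1 for b in bits]
    return [math.tanh(sum(w * s for w, s in zip(row, signs))) for row in w_enc]

def distort(marker, rng):
    """Hypothetical distortion model: additive Gaussian noise per pixel."""
    return [p + rng.gauss(0.0, NOISE_STD) for p in marker]

def decode(w_dec, image):
    """Reading network: per-bit probability that the bit equals 1."""
    return [sigmoid(sum(w * p for w, p in zip(row, image))) for row in w_dec]

def train_step(w_enc, w_dec, bits, rng):
    """One SGD step on binary cross-entropy that updates both networks."""
    signs = [2 * b - 1 for b in bits]
    marker = encode(w_enc, bits)
    image = distort(marker, rng)
    probs = decode(w_dec, image)
    d_logit = [p - b for p, b in zip(probs, bits)]
    # Gradient flowing back through the distortion into the marker pixels.
    d_pixel = [sum(d_logit[i] * w_dec[i][j] for i in range(N_BITS))
               for j in range(N_PIXELS)]
    for i in range(N_BITS):
        for j in range(N_PIXELS):
            w_dec[i][j] -= LR * d_logit[i] * image[j]
    for j in range(N_PIXELS):
        d_pre = d_pixel[j] * (1.0 - marker[j] ** 2)  # derivative of tanh
        for k in range(N_BITS):
            w_enc[j][k] -= LR * d_pre * signs[k]

def bit_accuracy(w_enc, w_dec):
    """Fraction of bits recovered from clean markers over all possible codes."""
    correct = 0
    for code in range(2 ** N_BITS):
        bits = [(code >> k) & 1 for k in range(N_BITS)]
        probs = decode(w_dec, encode(w_enc, bits))
        correct += sum(int((p > 0.5) == bool(b)) for p, b in zip(probs, bits))
    return correct / (N_BITS * 2 ** N_BITS)

def train(steps=3000, seed=0):
    rng = random.Random(seed)
    w_enc = [[rng.uniform(-0.5, 0.5) for _ in range(N_BITS)]
             for _ in range(N_PIXELS)]
    w_dec = [[rng.uniform(-0.5, 0.5) for _ in range(N_PIXELS)]
             for _ in range(N_BITS)]
    for _ in range(steps):
        bits = [rng.randint(0, 1) for _ in range(N_BITS)]
        train_step(w_enc, w_dec, bits, rng)
    return w_enc, w_dec

w_enc, w_dec = train()
print("bit accuracy on clean markers:", bit_accuracy(w_enc, w_dec))
```

The key point of the sketch is that the decoding error is propagated back through the simulated distortion into the marker-drawing network, so both sets of weights are adjusted in the same step.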

The second paper accepted to NIPS was written by PhD student Evgeniya Ustinova and concerns the training of neural networks that embed data into high-dimensional vector spaces. Such embeddings are widely used and have a range of applications, e.g. in image search and retrieval. Ustinova and Lempitsky developed a new criterion (loss function) for training such networks. Compared with previously proposed criteria, it has fewer tunable parameters and produces better embeddings, which results in more accurate image search.
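
As a rough illustration of how such embeddings are used in image search (this shows the retrieval step only, not the proposed loss function), the sketch below ranks gallery items by cosine similarity to a query vector. The three-dimensional vectors and item names are made up for the example; real embeddings would come from a trained network and have many more dimensions.

```python
import math

def l2_normalize(v):
    # Scale a non-zero vector to unit length.
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cosine_similarity(a, b):
    a, b = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a, b))

def search(query, gallery, top_k=2):
    """Return the top_k gallery ids ranked by cosine similarity to the query."""
    ranked = sorted(
        gallery,
        key=lambda item_id: cosine_similarity(query, gallery[item_id]),
        reverse=True,
    )
    return ranked[:top_k]

# Hypothetical embeddings of three gallery images.
gallery = {
    "cat_1": [0.9, 0.1, 0.0],
    "cat_2": [0.8, 0.2, 0.1],
    "car_1": [0.0, 0.1, 0.9],
}
query = [1.0, 0.0, 0.0]  # hypothetical embedding of a query image
print(search(query, gallery))
```

A better embedding places images of the same object closer together than images of different objects, which directly improves the ranking returned by a search like this.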

Deep neural networks currently demonstrate state-of-the-art performance in many domains of large-scale machine learning, such as computer vision, speech recognition and text processing. Skoltech’s Computer Vision group focuses on methods that apply deep neural networks to visual information. The group’s recent achievements include an algorithm that changes the direction of gaze in images and video in real time, an algorithm for real-time image stylization, and a method for improved object detection in microscopy images, developed jointly with colleagues from Oxford. Technology developed in the Computer Vision group has been successfully commercialized: for example, the Eye Contact Videoconferencing Technology was recently licensed to the company RealD.

Victor Lempitsky: “With each year it becomes more difficult to publish your work at NIPS. The two papers from our group that were accepted this time have different flavours (the first is more applied and the second is somewhat more fundamental). They demonstrate the level of research in our group, and they have many practical applications. I want to congratulate all group members who were involved.”

* NIPS (Advances in Neural Information Processing Systems) is the largest global forum on artificial intelligence and machine learning. NIPS has been held since 1987. Papers submitted to the conference undergo a rigorous, competitive selection process based on double-blind reviewing.

Contact information:

Skoltech Communications

+7 (495) 280 14 81