Skoltech researchers and their colleagues from smart prosthetics manufacturer Motorika and the joint Center for Cybernetic Medicine and Neuroprosthetics of Motorika and the Federal Medical and Biological Agency of Russia have devised an eye-tracking-based system for assessing the comfort level of prosthetic arm users. By monitoring how often an amputee has to look at their own prosthesis, along with other metrics, the system arrives at an objective measure that tells scientists and manufacturers to what extent the device feels like a part of the body or causes additional mental workload. Available from the OSF Preprints repository, the study presents the initial results of the fourth stage of research on prosthetic sensorization. That stage involved prosthesis users participating for four months to accommodate extended post-surgery rehabilitation and a one-month period of adaptation to invasive neuroprosthetics.

Smart prosthetics provide their users with sensory feedback: through electrical stimulation or other means, the prosthesis enables the amputee to sense the objects they are handling and the position of the hand without looking. However, the technology still requires refinement and further development and is so far rarely used outside the lab. To improve these devices, scientists and manufacturers of prosthetics need better ways to objectively gauge the elusive notions of comfort, embodiment, and cognitive load in amputees using them to perform everyday tasks. The Skoltech researchers and their co-authors have delivered just that.

“Sometimes, test subjects may report biased experiences because of their mood or eagerness. But at home, where subjects wear a camera, they might not trust the device enough to rely on it as much as they seem to in the controlled setting of the lab. Our approach makes it possible to extract additional objective proxy measures of comfort and mental load, with the potential to collect these data outside the lab,” the lead author of the study, Skoltech PhD student Mikhail Knyshenko, commented.

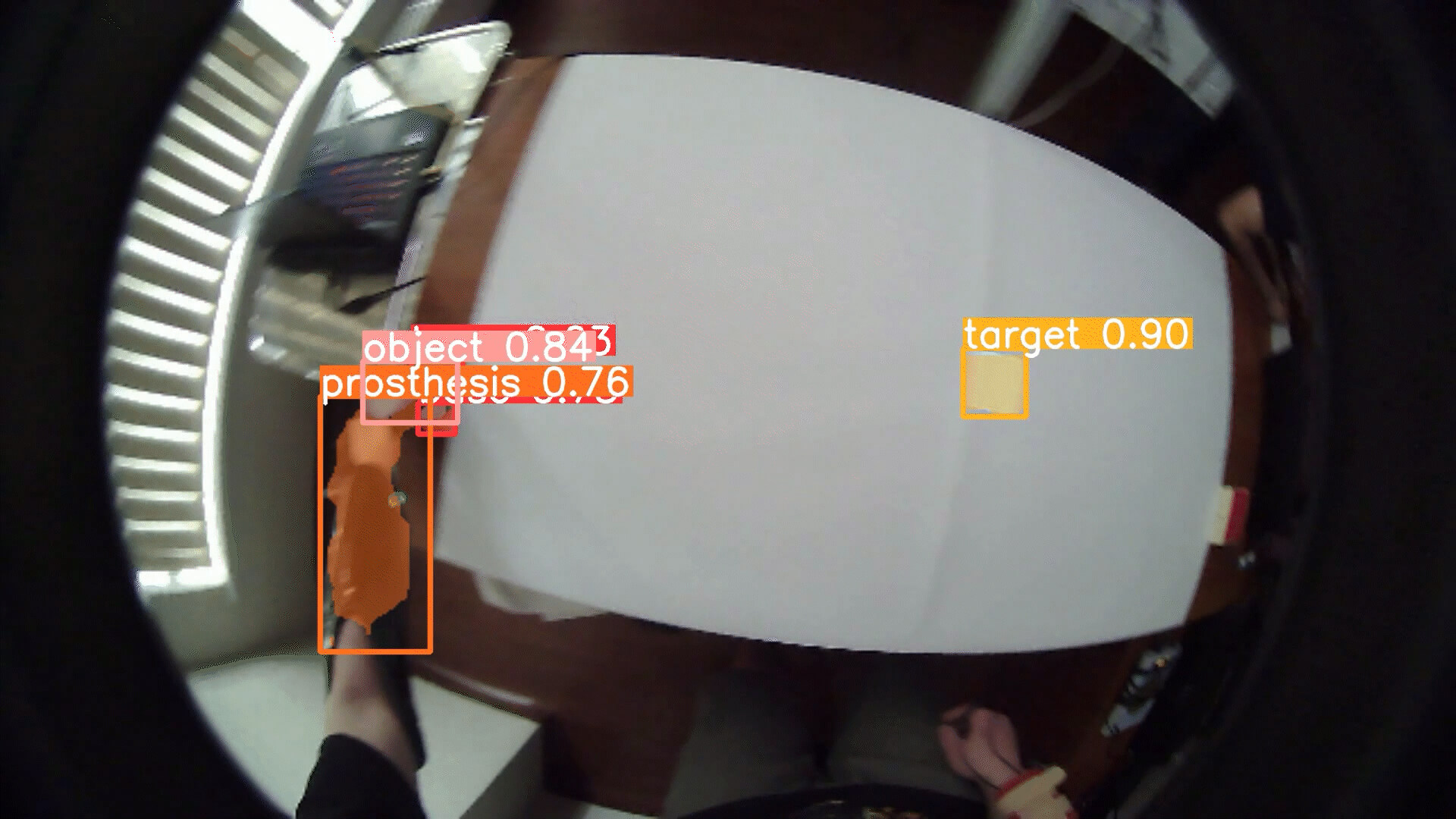

The new portable system comprises eye-tracking glasses with two cameras capturing eye movement and a third camera tracking the environment, sensors incorporated into the prosthesis, and the software that processes the data. Computer vision algorithms are used to recognize the key objects of interest in the videos: the prosthesis, the manipulated object, and the target area where the object is to be placed.
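
To make the idea of "how often the user looks at the prosthesis" concrete, here is a minimal Python sketch of one possible proxy: the share of video frames in which the gaze point falls inside the detected bounding box of the prosthesis. This is an illustration under stated assumptions, not the method used in the study. The `Box` class, the `gaze_on_target_ratio` function, the pixel coordinate convention, and the sample values are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box in scene-camera pixel coordinates (assumed format)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, point: Point) -> bool:
        x, y = point
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def gaze_on_target_ratio(
    gaze: Sequence[Optional[Point]],
    boxes: Sequence[Optional[Box]],
) -> float:
    """Fraction of usable frames in which the gaze point lies inside the box.

    A frame is usable only if both a gaze sample and a detection exist;
    frames with a blink (gaze is None) or a missed detection (box is None)
    are skipped. Returns 0.0 when no frame is usable.
    """
    usable = 0
    hits = 0
    for point, box in zip(gaze, boxes):
        if point is None or box is None:
            continue
        usable += 1
        if box.contains(point):
            hits += 1
    return hits / usable if usable else 0.0


# Hypothetical per-frame data: gaze points and prosthesis detections.
prosthesis = Box(90, 90, 150, 150)
gaze_samples = [(100, 100), (250, 250), None, (120, 130)]
detections = [prosthesis, prosthesis, prosthesis, None]

print(gaze_on_target_ratio(gaze_samples, detections))  # prints 0.5
```

In this toy example only the first two frames are usable, and the gaze lands on the prosthesis in one of them. The same function could be applied to the manipulated object or the target area by passing their bounding boxes instead.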

In the experiment reported in the study, a test subject seated at a table repeatedly completed a task that involved grasping the object, moving it to an illuminated target area on the table, and releasing the object. In half of the trials, the subject received sensory feedback from the prosthesis in the form of electrical stimulation delivered via an electrode implanted in the arm. The stimulation evoked sensations in the phantom limb that appeared to match the parts of the prosthetic hand that were in contact with the object. The trials continued over the course of multiple days, yielding some 250 hours of video. With that much footage, the computer vision algorithm proved very useful for keeping track of the objects of interest.

The sensory feedback provided to the test subject by the prosthesis was fine-tuned to him personally based on prior sessions in which the feasible range of stimulation was determined. Importantly, these preparatory sessions also involved collecting the test subject’s self-reports concerning the precise nature of the sensations produced by electrical stimulation. This tells the researchers which kinds of stimulation map onto subjective sensations of a particular sort and which part of the “hand” each sensation seems to originate in.

“We’ve been developing invasive stimulation and prosthetic restoration methods for five years. Our goal is to steadily increase the independence and mobility of these technologies while keeping them safe and convenient for the user. In this study, for the first time, we enabled a participant to move freely and interact with real-world objects. With this added freedom in our bidirectional system, we aim to objectively evaluate how users’ relationships with their prosthetics evolve,” the principal investigator of the study, Research Scientist Gurgen Soghoyan of Skoltech Neuro, said.

“It is only through the interaction of major industrial partners with top science centers such as the Federal Center of Brain Research and Neurotechnologies of the Federal Medical and Biological Agency of Russia and Skoltech that innovative science-driven projects of this kind can be implemented. This technology will enable patients to regain the functions they lost and manage phantom pain. The project’s objective is to put the product on the market,” said Yuri Matvienko, who heads the Neurotechnology Department at Motorika.

According to the team, the new portable system can now be used by researchers and prosthesis manufacturers to collect valuable data that would complement conventional self-reports and guide the development of smart prosthetics.