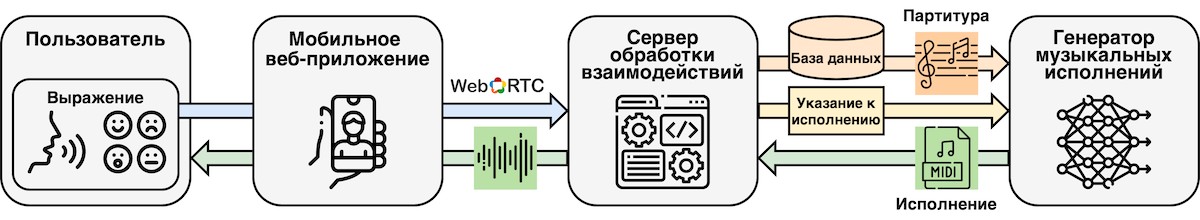

Performing music takes musical expertise and instrument training. Many find this a daunting task, and some lack the physical ability to play an instrument at all. Ilya Borovik, a PhD student in computational and data science and engineering, and his co-author from Germany have set an ambitious goal: to make music performance accessible to people regardless of their background. To let people enjoy familiar compositions, the authors introduced an app that lets users tailor a performance to their own taste through voice, facial expressions, or gestures, for example, by asking for a piece to be played slower or as if it were a lullaby. The results are reported in Frontiers in Artificial Intelligence and Applications.

“The demo version of our system comprises an AI model, which has been trained using an open corpus of 1,067 renderings provided for 236 compositions of piano music. The model takes notated music as input and learns how to play it while predicting performance characteristics: local tempo, position, duration, and note loudness. The output is a rendering of the composition. We aimed to provide control over the model to the user, so we incorporated it into the app, which enables interaction between the model and the end user,” says Ilya Borovik.
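To make the four predicted characteristics concrete, here is a minimal Python sketch of how per-note values for local tempo, position, duration, and loudness could turn notated music into a timed rendering. It is an illustration only, not the authors' trained model: the class names, field names, and the `render` function are hypothetical, and the expression values are supplied by hand rather than predicted.

```python
from dataclasses import dataclass


@dataclass
class ScoreNote:
    """A notated note (hypothetical representation)."""
    pitch: int             # MIDI pitch number
    onset_beats: float     # notated position, in beats from the start
    duration_beats: float  # notated length, in beats


@dataclass
class NoteExpression:
    """Per-note performance characteristics a model might predict (assumed form)."""
    tempo_bpm: float       # local tempo
    onset_shift_s: float   # deviation of the note position, in seconds
    duration_ratio: float  # performed length relative to the notated length
    velocity: int          # loudness as a MIDI velocity


@dataclass
class PerformedNote:
    pitch: int
    onset_s: float
    duration_s: float
    velocity: int


def render(score, expression):
    """Combine notated notes with per-note expression into timed notes."""
    performed = []
    clock_s = 0.0
    prev_onset_beats = 0.0
    for note, expr in zip(score, expression):
        sec_per_beat = 60.0 / expr.tempo_bpm
        # Advance the clock by the beats elapsed since the previous note,
        # measured at the current local tempo.
        clock_s += (note.onset_beats - prev_onset_beats) * sec_per_beat
        prev_onset_beats = note.onset_beats
        performed.append(PerformedNote(
            pitch=note.pitch,
            onset_s=max(0.0, clock_s + expr.onset_shift_s),
            duration_s=note.duration_beats * sec_per_beat * expr.duration_ratio,
            velocity=max(1, min(127, expr.velocity)),  # clamp to the MIDI range
        ))
    return performed


# Two quarter notes: the second is played at half the tempo and shortened.
score = [ScoreNote(60, 0.0, 1.0), ScoreNote(62, 1.0, 1.0)]
expression = [
    NoteExpression(tempo_bpm=120.0, onset_shift_s=0.0, duration_ratio=1.0, velocity=64),
    NoteExpression(tempo_bpm=60.0, onset_shift_s=0.0, duration_ratio=0.5, velocity=200),
]
rendering = render(score, expression)
```

In this sketch, a slower rendering amounts to lower `tempo_bpm` values and a softer one to lower `velocity` values; the real system learns such values from recorded performances.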

When launching the app, the user grants access to the smartphone's camera and microphone and starts listening to a randomly generated rendering of a composition from the database. To change the rendering, the user starts a video or audio recording. Using voice or facial expressions, the user can ask the model to perform the music in some other way. For example, Chopin’s Mazurkas can be turned into a lullaby.

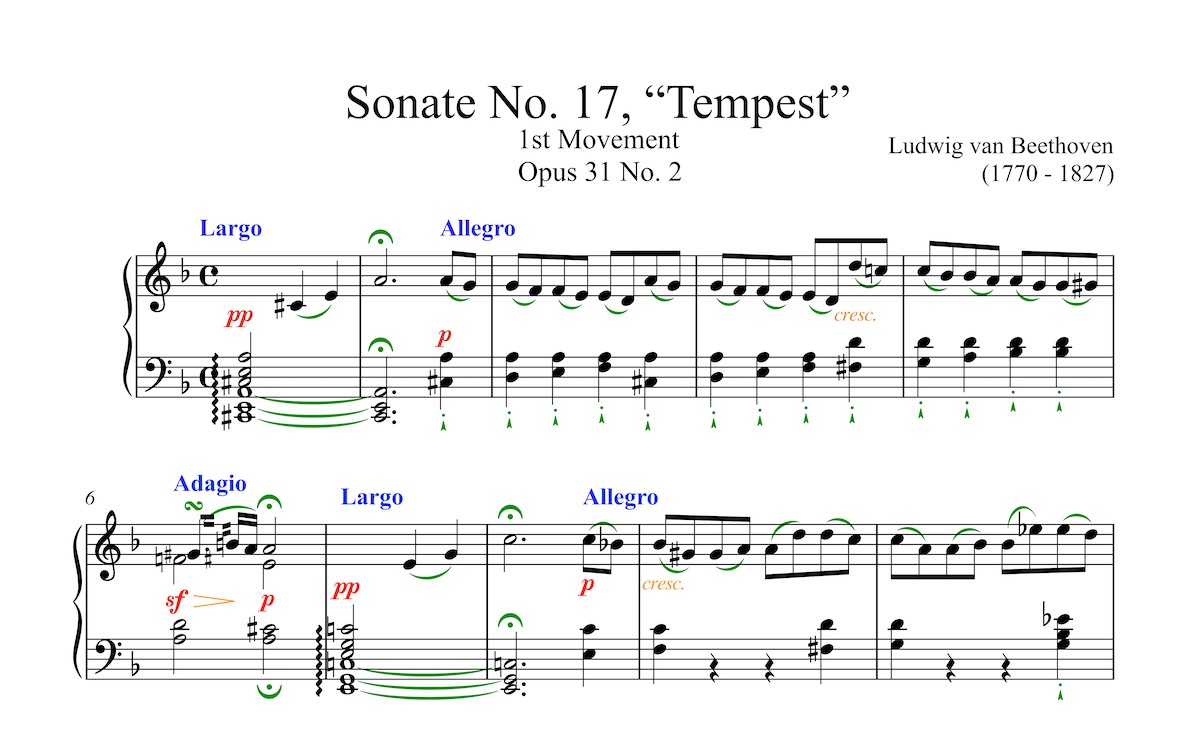

“For interacting with the model, we use performance directions that have already been put in the notes. The musical scores have written markings that guide the player on how to play music: faster, slower, louder, etc. Based on all available data, the voice commands of the user are turned into these performance directions,” adds Ilya.

Performance directions for an excerpt from Beethoven's Piano Sonata No. 17. Blue directions mark tempo, red and orange mark loudness, and green marks note accents. Source: Ilya Borovik
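The idea of turning a user's request into score-style performance directions can be sketched as a simple keyword lookup. This is a hedged illustration, not the method used in the app, which derives directions from data: the lexicon, the style preset, and the function name `request_to_directions` are all hypothetical. The three categories (tempo, loudness, accent) follow the figure caption, and the markings are standard Italian terms.

```python
# Hypothetical lexicon: request keyword -> (direction category, score marking).
DIRECTION_LEXICON = {
    "slower": ("tempo", "adagio"),
    "faster": ("tempo", "allegro"),
    "softer": ("loudness", "piano"),
    "louder": ("loudness", "forte"),
    "accented": ("accent", "marcato"),
}

# Hypothetical style presets that expand into several basic keywords.
STYLE_PRESETS = {
    "lullaby": ["slower", "softer"],
}


def request_to_directions(request: str) -> dict:
    """Map a transcribed request to one marking per direction category."""
    words = request.lower().replace(",", " ").split()

    # Expand style words such as "lullaby" into basic keywords.
    expanded = []
    for word in words:
        expanded.extend(STYLE_PRESETS.get(word, [word]))

    # Keep the last marking mentioned for each category.
    directions = {}
    for word in expanded:
        if word in DIRECTION_LEXICON:
            category, marking = DIRECTION_LEXICON[word]
            directions[category] = marking
    return directions


lullaby = request_to_directions("Play it like a lullaby")
brisk = request_to_directions("Louder, faster")
```

Here `lullaby` holds a slow tempo marking and a soft loudness marking, which a rendering model could then take as conditioning alongside the score.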

The project is still in progress. The research team plans to make communication between the user and the model more interactive, so that the user can get the desired result in just a couple of iterations. The app’s interface will be improved, and the database of compositions will be expanded. For now, it contains classical music, which is part of the world’s musical heritage. At the next stage, the researchers will include orchestral music.

Contact information:

Skoltech Communications

+7 (495) 280 14 81